Artificial intelligence (AI) now reaches into much of today’s technology, brands, and products, often so seamlessly that we don’t notice it. It plays a role behind the scenes in many of the tools, apps, and services that we use every day.

We recently posted about AI used in mobile advertising technology, the huge payouts AI experts and engineers are receiving, and the smart home war between Amazon and Google. But these just skim the surface of an AI renaissance.

The Ancient History of AI

Humans have been interested in creating AI applications for as long as we have been programming. In fact, being able to code really sped up the development of theories in the AI field. But the idea itself is much older. Bet you didn’t know this: the idea of a self-conscious, albeit subservient, machine was already floating around in the 14th century.

Ramon Lull, a Spanish theologian and poet, devised machines for discovering new knowledge by combining concepts and ideas. It wasn’t until roughly 300 years later that mathematician Gottfried Leibniz came along to flesh out what Lull was saying. Yes, Leibniz elaborated, all ideas are nothing more than a bunch of smaller ideas put together.

Another 100 years later, Thomas Bayes developed a framework for reasoning about the probability of events. It became a cornerstone of machine learning, a subset of AI in which machines learn from the data they take in and draw conclusions from it. Another 100 years after that, George Boole argued that these qualitative ideas could be boiled down into systematic equations, setting up the idea that programming could accelerate the applications of AI.
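To make Bayes’s framework a little more concrete, here is a minimal Python sketch of the rule that bears his name. The spam-filter scenario, the function name `bayes_posterior`, and every number in it are made up for illustration; they are assumptions, not figures from any real system.

```python
def bayes_posterior(prior, likelihood_if_true, likelihood_if_false):
    """Return P(hypothesis | evidence) using Bayes' rule."""
    # Total probability of seeing the evidence at all.
    evidence = likelihood_if_true * prior + likelihood_if_false * (1 - prior)
    return likelihood_if_true * prior / evidence

# Hypothetical numbers: 20% of all email is spam, a certain word shows up
# in 60% of spam messages and in 5% of legitimate messages.
posterior = bayes_posterior(prior=0.2, likelihood_if_true=0.6, likelihood_if_false=0.05)
print(f"Chance the email is spam, given the word: {posterior:.2f}")
```

With these invented inputs, seeing the word raises the estimated chance of spam from 20% to 75%. Updating a belief as evidence arrives is the kind of reasoning machine learning leans on.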

50 years later, in 1898, Nikola Tesla revealed the world’s first radio-controlled vessel. He proclaimed it was equipped with “a borrowed mind”. After that, the word “robot” and a few early iterations of robots popped up.

From there, it took nearly another 50 years until Warren S. McCulloch and Walter Pitts published a paper in a mathematical biophysics journal about computer-based “neural networks” that “mimic the brain”. That theory has been fleshed out in the decades since.

In 1950, Alan Turing proposed “the imitation game”, which asked people to consider whether a computer must hold “human intelligence” if a human cannot tell the difference between it and another human. This pushed the challenge of “natural language processing” to the forefront.

In 1955, the term “artificial intelligence” was officially recorded in a proposal for a two-month, ten-man study of AI. Later that year, Herbert Simon and Allen Newell developed the first AI program, which ultimately proved 38 of the first 52 theorems in Whitehead and Russell’s Principia Mathematica.

Four years later, in 1959, Arthur Samuel coined the term “machine learning,” which he used to describe a computer taught to play checkers. The goal was for the computer to play checkers better than the human who programmed it.

And though Herbert Simon, in 1965, predicted that “machines will be capable of doing any work a man can do within twenty years,” Hubert Dreyfus was arguing that the human mind and AI were not completely interchangeable. Dreyfus believed there were limits AI couldn’t reach.

The next 35 years included many improvements and refinements to the AI field by Japanese researchers and engineers. Unfortunately, during this time, governments around the world drastically reduced funding for AI research.

In the 2000s, tech giants like Google and large private universities like Carnegie Mellon jumped on the AI train. In 2011, IBM’s Watson defeated human champions on Jeopardy! Ever since then, AI has been a consistent headline maker.

Know Your AI

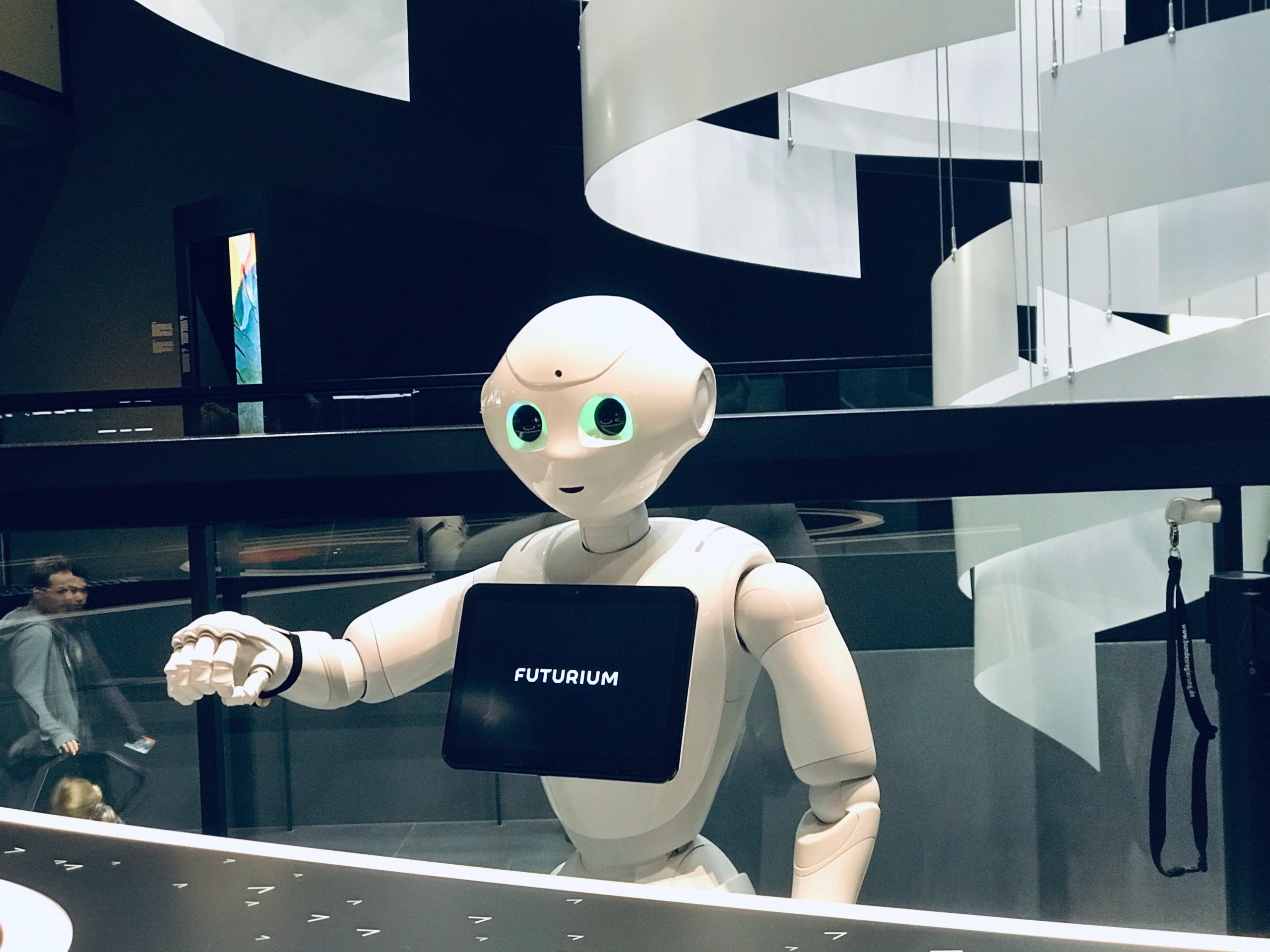

There are four types of AI. They range in skillset and “consciousness”. Type I specializes in one area, and it acts on what it sees. This is called “purely reactive” AI.

Type II is a little more complex. Known as “limited memory,” it stores some memory of past experiences to inform its real-time decision making. Examples of this AI in action include self-driving cars.
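The difference between Type I and Type II can be sketched in a few lines of Python. This is a toy illustration, not how any real self-driving car works: the names `reactive_decision` and `LimitedMemoryDriver`, the distance thresholds, and the sample readings are all hypothetical.

```python
from collections import deque

def reactive_decision(distance_m):
    """Type I: act only on the current reading."""
    return "brake" if distance_m < 10 else "cruise"

class LimitedMemoryDriver:
    """Type II: keep a short window of past readings to inform the decision."""

    def __init__(self, window=3):
        # Only the most recent `window` readings are remembered.
        self.readings = deque(maxlen=window)

    def decide(self, distance_m):
        self.readings.append(distance_m)
        if distance_m < 10:
            return "brake"
        # If the gap shrank by more than 15 m across the remembered readings,
        # brake early, even though the current gap still looks safe.
        if len(self.readings) >= 2 and self.readings[0] - self.readings[-1] > 15:
            return "brake"
        return "cruise"

# Hypothetical distances (in meters) to the car ahead, one reading at a time.
readings = [50, 40, 30, 20]
driver = LimitedMemoryDriver()
reactive = [reactive_decision(d) for d in readings]
with_memory = [driver.decide(d) for d in readings]
print(reactive)
print(with_memory)
```

On these made-up readings, the reactive function never brakes because no single reading is below its threshold, while the limited-memory driver starts braking at the third reading because it notices the gap closing.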

Type III includes R2-D2 from Star Wars. We haven’t reached this level of AI yet, but a machine of this type would understand the thoughts and emotions that influence human behavior. It could interact socially, and it would totally know what motives and intentions entail. This type of AI is known as “theory of mind.”

Type IV is the pinnacle of AI. We don’t know yet what this type of robot could really encompass. The potential and fear surrounding this one are unfathomable; this type of AI usually appears in stories trying to warn us that machines could become too smart. An example is Ava from Ex Machina. Ava had a plan all along in the movie and was saying things she knew would influence the thoughts and actions of the main characters. Type IV AI is called “self-awareness.”

The Beginning of a Renaissance

The basis of AI lies in machine learning, natural language processing, data science, and neural networks. With these tools, the computer has a lot to learn from, experience, and plan for. With mobile app developers integrating AI into their apps, we’re starting to reap the rewards of AI from the palm of our hands. But don’t expect AI to stop expanding there. It will become a part of the subway commute, the fast food ordering process, education, and much, much more.

While Simon was predicting a complete machine takeover by 1985, we know better today. There are so many facets to our thoughts that programming them all into a machine seems out of reach at the moment. Even if we could manage to do that, the specific flowchart or order of thought could cause issues with the machine. MIT brings up this uncertainty in a recent article.

Although gloomy headlines constantly prop up the idea of a self-aware AI apocalypse for attention, our future with AI appears to be much more positive. And it’s just beginning. AI is already being applied to productivity, logistics, and high-level decision-making in various industries. It’s even being used to improve its own capabilities. Google’s developers in San Francisco are currently working on AI that will be able to build new AI.

Technology continues expanding at an unbelievably accelerated rate. Although we do benefit from a website suggesting matching pants to a shirt, AI is capable of so much more than we could know right now. So while it doesn’t pay to be fretful of the future, it is prudent to be aware of the inherent changes that AI disruption will bring to how we live. Are you aware?