Artificial intelligence (AI) has been expanding its skillset and improving its performance in a variety of industries. It’s become an integral part of image processing and recognition, medical diagnosis, automatic translation, autonomous cars, and speech analysis. But these advancements raise the question: when will AI reach its full potential? When it’s infiltrated every part of our lives and the world around us? When it no longer has any challenges to overcome?

Alan Turing, the London-born prodigy in mathematics, computer science, and philosophy, thought about the limits of computation almost a century ago. He proved that some computations never finish, and that no general method can predict in advance which ones will run forever. Others do eventually finish but may take years or centuries to do so. Still, theory and practice, although closely related, do not always line up, and AI could push the boundaries of what we think is possible.

A Theory of Mind, Not Computer Science

A classic example of a computation that takes impractically long is asking a supercomputer to work out every possible combination of future moves in a chess game. Calculating a few moves ahead takes little time, but the problem arises when you ask it to explore every possible continuation to the end of an 80-move game. A year after starting, the computer would still have explored only a tiny fraction of the possible games. This is an issue caused by scaling up a simple problem: every additional move multiplies the number of positions that must be considered.
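To make the scaling problem concrete, here is a rough back-of-the-envelope sketch. It assumes a uniform game tree with about 35 legal moves per position (a commonly cited rough average for chess), treats each of the 80 moves as a single turn (which, if anything, understates the problem, since a chess “move” usually counts both players), and assumes a hypothetical machine that evaluates one billion positions per second. All three numbers are illustrative assumptions, not measurements.

```python
import math

# Illustrative assumptions only (not measured values):
BRANCHING_FACTOR = 35           # rough average number of legal moves per chess position
POSITIONS_PER_SECOND = 1e9      # hypothetical machine: one billion positions per second
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def game_tree_size(branching_factor: int, depth: int) -> int:
    """Number of positions at the bottom of a uniform game tree: b ** d."""
    return branching_factor ** depth


def years_to_search(positions: int, positions_per_second: float) -> float:
    """Years needed to visit every position at a fixed evaluation rate."""
    return positions / positions_per_second / SECONDS_PER_YEAR


# Show how quickly the work explodes as we look further ahead.
for depth in (2, 4, 8, 16, 80):
    positions = game_tree_size(BRANCHING_FACTOR, depth)
    print(
        f"depth {depth:>2}: about 10^{math.log10(positions):.1f} positions, "
        f"{years_to_search(positions, POSITIONS_PER_SECOND):.3g} years"
    )
```

Under these assumptions, each extra move multiplies the work by roughly 35: looking 16 moves ahead already takes on the order of a hundred million years, and searching all 80 moves would take far longer than the age of the universe.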

Decades ago, AI did well at smaller games but had difficulty scaling up to larger games like chess. Modern-day AI, however, has used a variety of mathematical concepts and machine learning techniques to get past this hurdle. It can now beat the world’s best Go players by looking further ahead than a human ever could, while using learned judgment to decide which moves are worth exploring. But if we look closely at the scaling-up problem, it’s more of a computer science problem than an AI limitation.

The ultimate goal for AI is seamless human-computer interaction. It must be able to take in a variety of feedback and adjust its performance accordingly, act intelligently, communicate clearly, and, if at all possible, be interactive, friendly, and even social. So how do we scale AI up from playing games on a computer screen to a “normal-looking” intelligent technology? We want AI to eventually act like a human being: one that remembers your past conversations, understands belief systems, reads between the lines, and recognizes a speaker’s intentions.

The psychological term for this is “theory of mind”, and it refers to understanding that the person you’re interacting with has their own thoughts and experiences, perceives the world from their own perspective, and wants to connect and relate with you. With a theory of mind, a person can map the other person’s thoughts, experiences, and intentions onto their own.

The Self Model

The issue is that AI applications are still mainly screen-based. Chatbots, for example, are accessed through a computer screen. But for an AI to truly hold a conversation and pick up all of its nuances, like signals, gestures, and unspoken intentions, it needs a physical body. It also needs to be aware of that body, not only in relation to the other person but also in relation to the world. Human children gradually develop such a mental model, so it’s not out of the question that one could be programmed into an AI.

Social interaction depends on every party having a “sense of self” while maintaining a mental model of the other parties. For an AI, a sense of self would include an understanding of how its body operates, a map of the space it’s in, a catalog of skills and actions, the ability to learn more, and a subjective perspective that could be updated and changed based on a conversation.

AI can inherit the biases of its designers and developers. So an AI that starts out with programmed experiences needs to understand those experiences, and it also needs to add new experiences to its memory, just as a human does. Humans gather new experiences through their bodies, by acting in the world and interacting with other people. Thus, to truly relate to and connect with humans, an AI needs a physical body.

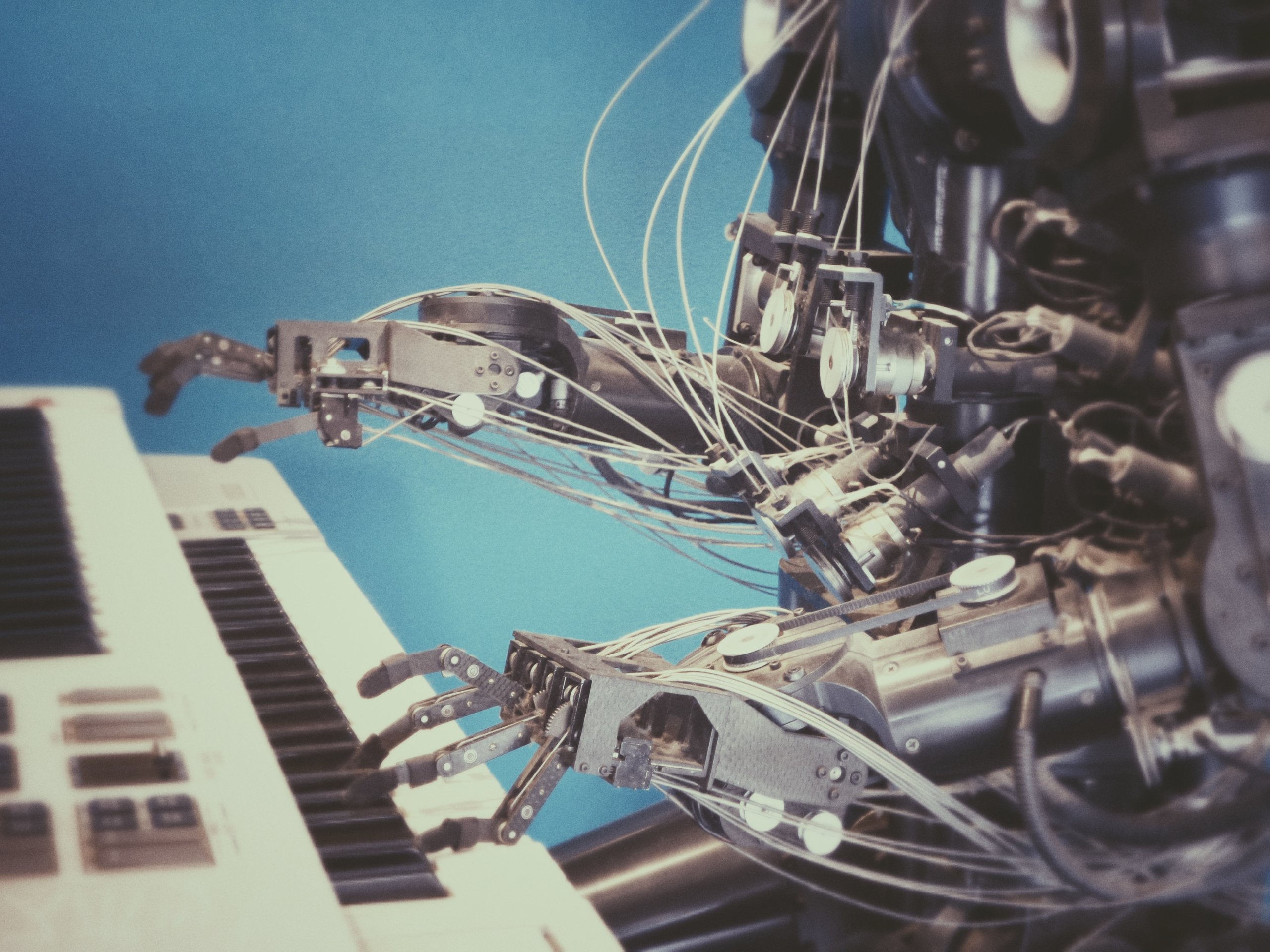

Perception and Movement

We could develop AI-enhanced robots that follow the developmental and growth patterns of human infants and children. It takes years for children to learn how to touch, feel, and act, not to mention learning about the consequences of their actions. There is a massive amount of research and first-hand observation of human children that can inform how we build these AI robots with physical bodies.

These days, research teams are experimenting with mimicking infancy in robots, allowing the robot infant to learn from its caregiver, learn about its surroundings, teach itself about the physics of the world around it, and continue to learn and grow based on actions, consequences, and conversations. We’ll have to see how these experiments turn out, but one thing is for sure: the future of AI is not in embedded systems; it’s in embodied systems.