Artificial intelligence (AI) has advanced so quickly that it can seem like the perfect tool for countless medical technology applications. But how close is the hype to reality?

With the COVID-19 pandemic, there is growing hope that AI can be used for patient screening, disease identification, and automation of paperwork. This could free up crucial time and resources for overworked hospital staff. But a study by Google Health showed that an AI can be highly accurate in the lab and still perform poorly in a real clinical setting.

A Real-Life Lesson for Google Health

The team at Google Health learned that an AI needs to be tailored to the clinical environment where it will eventually be used. And there is no set of universal guidelines; hospitals and medical facilities all differ in their data entry, data points, and patient processes. Knowing these nuances is extremely important if an AI is to succeed in these environments.

To obtain FDA clearance or approval in the European Union, a medical AI application must reach a certain level of accuracy. But no regulatory body has enacted rules requiring that the AI improve outcomes for patients. Emma Beede, a UX researcher at Google Health, says that besides being accurate, AI should also be patient-centric. Unfortunately, it seems that the team at Google Health is having trouble enough with the first factor.

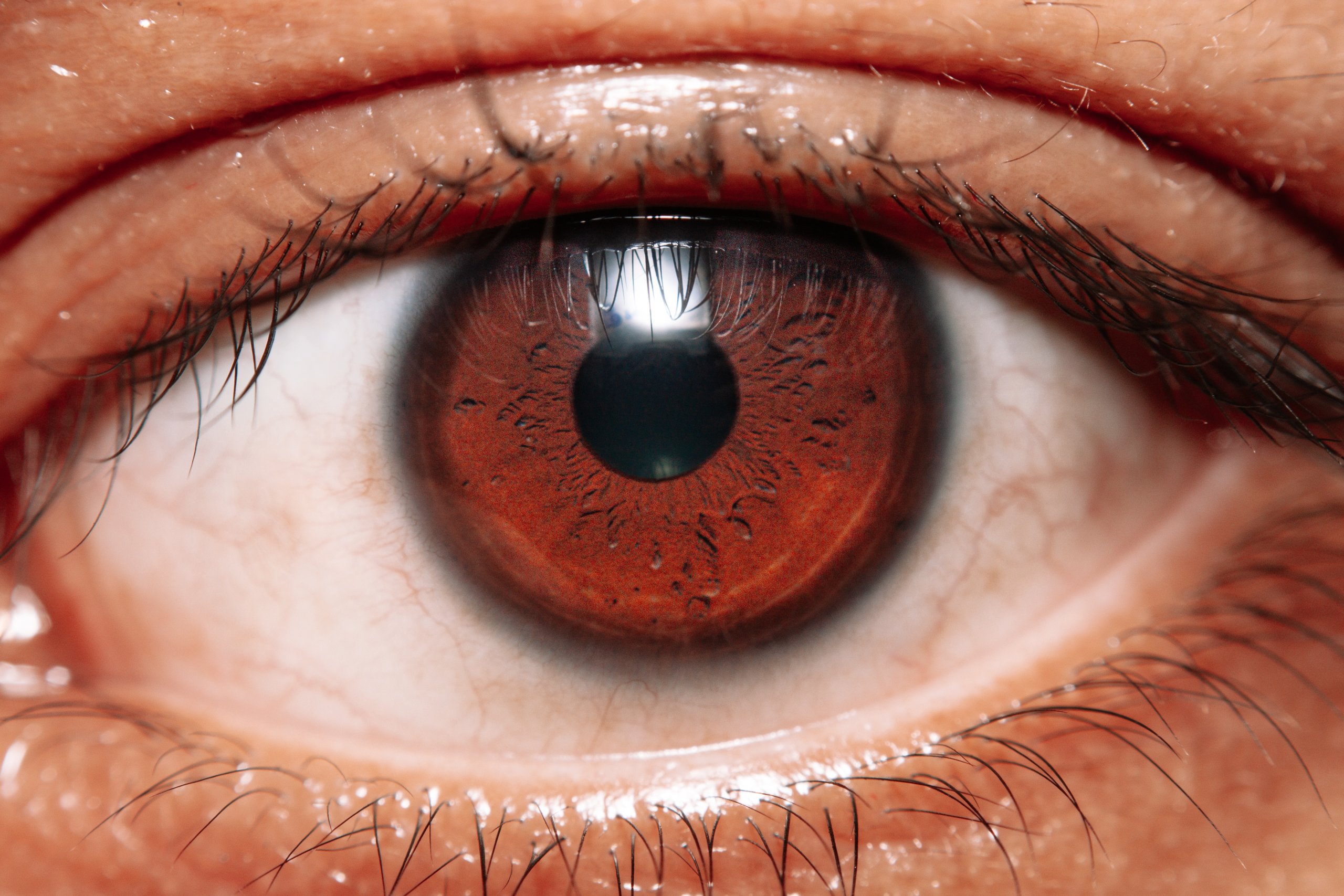

Diabetes can cause blindness, so Thailand’s national health ministry mandates that more than 60% of diabetic patients must be screened for diabetic retinopathy. However, Thailand has only 200 retinal specialists nationwide for 4.5 million patients, which works out to roughly one specialist for every 22,500 patients. This is where Google Health’s AI tool came in.

In a simulated environment, the AI was very successful in using deep learning to find signs of eye disease in diabetic patients. But, as the 11 clinics in Thailand that used the AI found, real-world results contrasted starkly with the tool’s lab performance.

Lapses In Training the AI

Before the AI was introduced, Thai nurses would take photos of patients’ eyes during their exams, and these photos would be sent to external specialists. But this process took up to 10 weeks.

In the lab, the AI found signs of diabetic retinopathy in eye scans with more than 90% accuracy. This rate is so good that it’s referred to as “human specialist level”, and the best part is that the AI took only 10 minutes to return a result.

But the Google Health team knew that without real-world testing, the AI was unproven. The researchers observed nurses as they scanned patients’ eyes and interviewed them about how they felt about the new AI.

The feedback wasn’t positive. For example, the AI sometimes didn’t return a result at all. It had been trained on high-resolution images, so when a nurse’s photo didn’t have enough definition, the deep learning algorithm rejected it.

The Thai nurses were scanning dozens of patients per hour, and the photos were often taken in poor lighting. This meant that more than 20% of images ended up being automatically rejected by the AI.

A Patient Experience In Need of Improvement

If a patient was part of the unlucky 20%, they were told to visit a specialist at another clinic on another day. For the many patients who are extremely poor and cannot afford to take time away from their jobs, this was incredibly frustrating and inconvenient.

In this situation, the nurses were completely powerless. Even when they checked the images themselves and found no obvious signs of disease, there was nothing they could do to help the patient. Many nurses even tried to retake the scan, to no avail.

In San Francisco, the Google Health team likely had a fast, stable, and strong internet connection. Part of the AI’s workflow is to upload images to the cloud for processing. But in Thailand, poor internet connections are the norm, and the uploads caused massive delays.

Patients had to wait at the clinic for their test results, leading many to start complaining. One nurse said, “They’ve been waiting here since 6 a.m., and for the first two hours we could only screen 10 patients.”

To loosen the AI’s strict rules and workflow, the Google Health team is building more flexibility into the tool. Nurses will be able to use their own judgment in borderline cases, and the model will accept lower-quality images.
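The difference between a strict quality gate and a more flexible one can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Google Health’s implementation: the 0.0 to 1.0 image-quality score, the three thresholds, the function names, and the sample scores are all hypothetical.

```python
from enum import Enum


class Decision(Enum):
    GRADE = "grade with model"
    NURSE_REVIEW = "defer to nurse judgment"
    REJECT = "reject, retake or refer"


# Hypothetical thresholds on a 0.0-1.0 image-quality score (assumptions).
STRICT_MIN = 0.8   # assumed cutoff for the original, lab-tuned gate
RELAXED_MIN = 0.6  # assumed lower cutoff once the model accepts weaker images
REVIEW_MIN = 0.4   # assumed floor for the borderline band a nurse can review


def strict_gate(quality: float) -> Decision:
    """Strict behavior: anything below the lab-tuned bar is rejected."""
    return Decision.GRADE if quality >= STRICT_MIN else Decision.REJECT


def flexible_gate(quality: float) -> Decision:
    """Flexible behavior: lower bar for the model, plus a nurse-review band."""
    if quality >= RELAXED_MIN:
        return Decision.GRADE
    if quality >= REVIEW_MIN:
        return Decision.NURSE_REVIEW
    return Decision.REJECT


def rejection_rate(scores, gate) -> float:
    """Fraction of images that the given gate rejects outright."""
    if not scores:
        return 0.0
    rejected = sum(1 for s in scores if gate(s) is Decision.REJECT)
    return rejected / len(scores)


if __name__ == "__main__":
    # Made-up scores for ten clinic photos, some taken in poor lighting.
    scores = [0.95, 0.9, 0.85, 0.82, 0.81, 0.9, 0.88, 0.7, 0.5, 0.3]
    print("strict gate rejects:", rejection_rate(scores, strict_gate))
    print("flexible gate rejects:", rejection_rate(scores, flexible_gate))
```

With these made-up numbers, the flexible gate sends fewer patients away without a result, because borderline photos are either graded or handed to the nurse instead of being rejected. Where the thresholds should actually sit is a clinical question that only real-world testing can answer.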

Prioritize Patients, Not Experiments

Hamid Tizhoosh works on AI for medical imaging at the University of Waterloo. He says that researchers are rushing to apply AI to COVID-19, but many of them don’t have any healthcare training or expertise. The Google Health experiment is a timely reminder of the fragility of an untested emerging technology in the real world. Tizhoosh says, “This is a crucial study for anybody interested in getting their hands dirty and actually implementing AI solutions in real-world settings.”

Michael Abramoff, an eye doctor and computer scientist at the University of Iowa, agrees with Tizhoosh’s sentiment. In fact, he says, if people have multiple bad experiences with AI, it will create a public backlash against the technology and any new applications. Abramoff says it’s extremely important to test and tweak an AI to fit the real-world clinical setting and the workflows that staff follow on a day-to-day basis.

“There is much more to healthcare than algorithms,” says Abramoff. Comparing AI to human specialists is not very useful, especially when it comes to accuracy. Because doctors discuss diagnoses, often disagreeing with each other, the AI needs to be flexible enough to fit into that type of collaborative environment.

Small Wins In Sight?

When an already high-performing algorithm is adjusted to fit a specific clinical setting, the result is a much better patient experience, a smoother workflow, and more time saved. Beede says that she saw first-hand how the AI helped people who were already good at their jobs. One nurse, in particular, “screened 1,000 patients on her own, and with this tool she’s unstoppable.”

At the end of the day, Beede said, “The patients didn’t really care that it was an AI rather than a human reading their images. They cared more about what their experience was going to be.”

This is an excellent reminder of the promise that AI brings to healthcare. But one must not forget that outcomes greatly shape experiences, so much so that they can even redefine them. And we’re sure that the 20% of patients who walked away with no results from the trial would agree.

Hopefully, the feedback that Google Health received from this study yields enough information for improvement in the next iteration of the AI algorithm.

What do you think about the tech titan’s tool for identifying diabetic retinopathy? As always, let us know your thoughts in the comments below!